Introduction

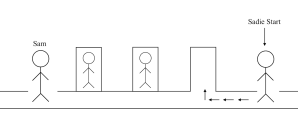

Embark on a strategic escapade as Sadie Green in Omission, navigating from the game room to the nurse’s desk. Your mission: log community service hours without being caught by Sam. With each passing second, your logged hours diminish, along with your prospects of earning the community service award. Move too fast, and you may alert Sam, ending the service project.

Why Remediate Tomorrow & Tomorrow & Tomorrow?

Although there are several games woven into the narrative of Gabrielle Zevin’s Tomorrow and Tomorrow and Tomorrow that we could have remediated, we wanted to create a new game based on the central relationship in the novel. The book follows thirty years of conflicts between gamemakers Sam and Sadie, beginning when they met as children in a hospital game room. It was during these formative years, through shared gameplay and blossoming friendship, that Sadie covertly logged her time with hospitalized Sam as community service hours–a fact she kept from him.

The months of lying by omission lead to their first major conflict, shaping the beginning chapters of the novel and hinting toward the future of their relationship. We chose to use the community service conflict as the foundation for our game, believing that it reflects the pivotal moments that will define and drive their relationship forward, both personally and professionally.

Game Design Process

Revisiting Tomorrow and Tomorrow and Tomorrow – Our initial step in the game design process involved re-reading the beginning chapters of Tomorrow and Tomorrow and Tomorrow by Gabrielle Zevin. It was essential to re-familiarize ourselves with the characters Sam and Sadie during their time in the hospital. The game adopts young Sam’s perspective on the community service conflict yet paradoxically casts the player as Sadie. Essentially, we wanted to make the game as if Sam were its creator, trying to understand Sadie’s actions in her lying-by-omission ‘game.’

Upon discovering that Sadie had been logging their time together as community service hours, Sam’s initial response was to shut her out, feeling reduced to a mere means to an end rather than a true friend. Although he grew out of this mindset, we used his initial perspective to make our game competitive. We integrated a countdown mechanic into the game, where the longer Sadie takes to beat the level, the more community service hours she loses. The countdown begins at 609 hours – the number of hours Sadie records in the novel. This mirrors Sam’s perception of Sadie’s priorities: he questions whether her intentions stemmed from genuine friendship or a mere tally of hours. Understanding the nuances of their relationship and their personalities at our early design stages was crucial for accurately translating their dynamic into our game’s narrative and elements.

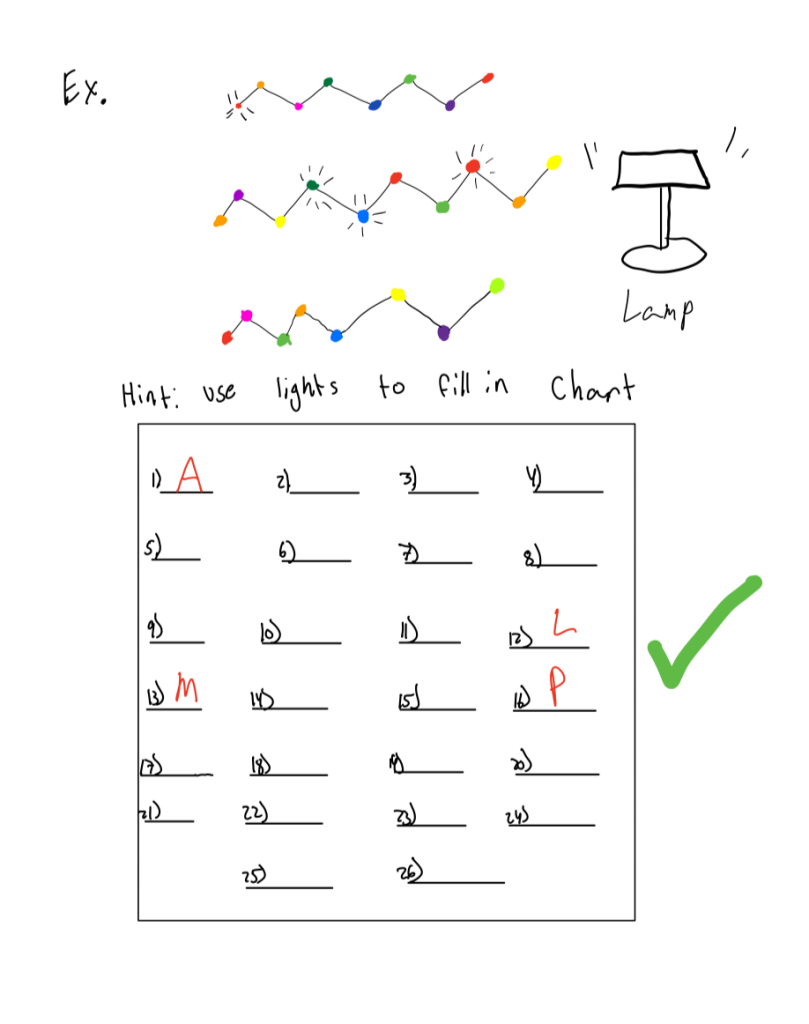

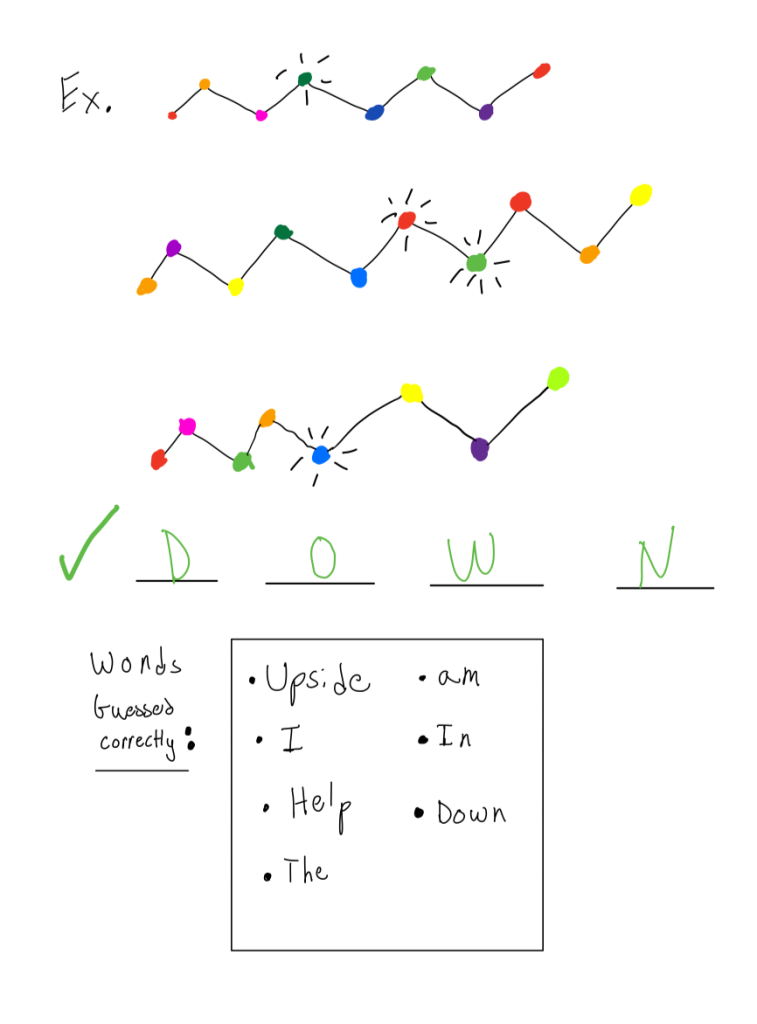

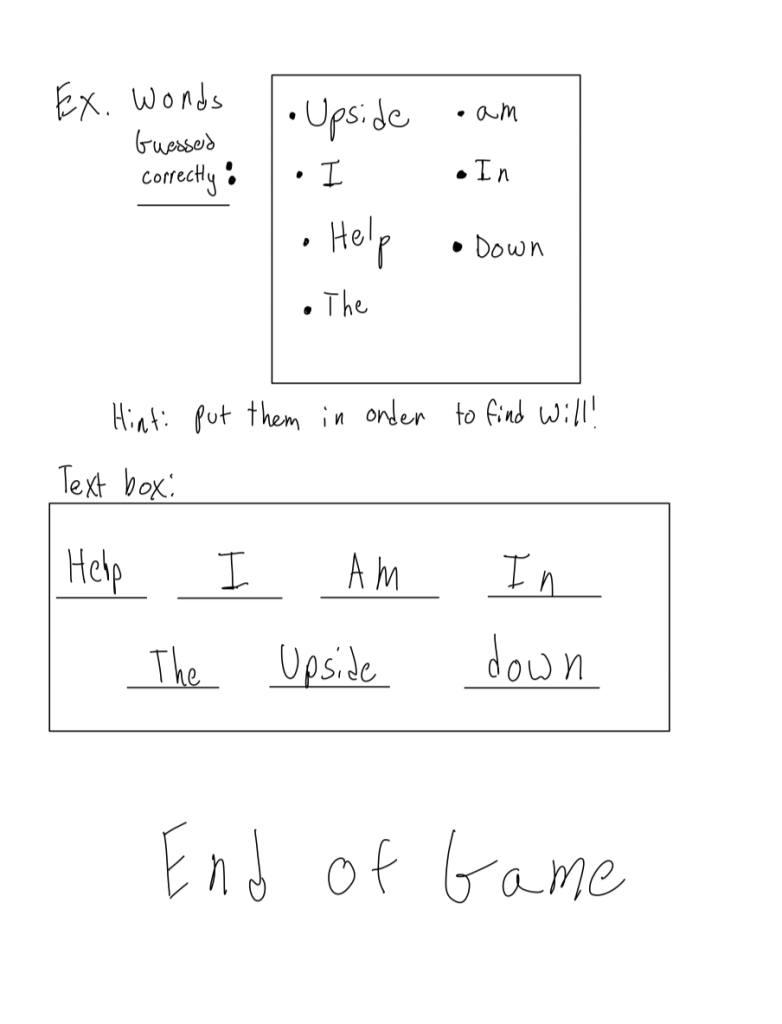

To translate our vision into a playable game, we planned every aspect of the gameplay with detailed road maps. These plans outlined the journey from the welcome screen to the various end-game scenarios, with clear instructions for programming Sadie’s and Sam’s interactions and specific conditions for winning or restarting the game.

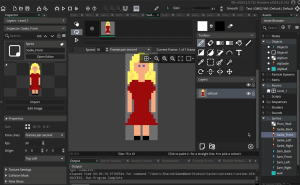

Program Selection – After evaluating various options, we carefully selected GameMaker Studio 2 to develop our game. This decision was driven by the specific needs of our project, particularly the demand for a program that excels in 2D game development with comprehensive features such as sprite and animation management, and a drag-and-drop interface that simplifies complex game development tasks.

Sprite and Background Design – Our first hands-on step in the game design process was designing the sprites. After discussions on the visual style we wanted to achieve, Henry meticulously crafted detailed sprites for Sam, Sadie, and a nurse. Sam’s and Sadie’s sprites included profiles for multiple directions (front, back, right, left) to support a more fluid gameplay experience. Henry utilized GameMaker’s sprite editor to layer colors and add depth, ensuring the characters stood out against the background.

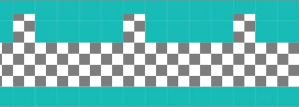

Background design followed, with specific attention to interactive elements like doorways, which are vital to the game’s aesthetics and mechanics. In designing the background, we aimed to mirror the ambiance of a hospital by incorporating elements such as checkered floors and numerous doorways while also integrating a bright wall color to create a more engaging atmosphere for the game.

Character Movement – For character movement, we programmed Sadie with basic directional controls (right, left, up, down), while Sam’s NPC movement (right, left) included pathfinding and collision interactions with walls. We also programmed character interactions, such as collisions between Sadie and Sam.

Challenges

GameMaker Studio 2 & Collaboration – One significant challenge we faced was GameMaker’s limited support for real-time collaboration across separate computers. This limitation posed difficulties in our early development process, as it restricted our ability to edit and design game elements simultaneously as a group. In an attempt to overcome this limitation, we tried to integrate Git to share game files between different computers. We successfully created a repository and separately downloaded the programs; however, integrating Git with GameMaker proved more difficult than anticipated, and we had to look at other options. Instead, we installed GameMaker Studio 2 on Henry’s computer and scheduled in-person meetings to collaborate on programming the game.

Complexity of Wall Collisions – Handling collisions, especially with walls, introduced a new layer of difficulty for our group. Initially, our characters would react unpredictably upon hitting a wall – sometimes getting stuck, other times passing through as if the barrier didn’t exist. We initially suspected it was a boundaries issue, but our many attempts to edit didn’t yield the results we were looking for.

We had to program walls not only to stop character movement but also to influence it depending on the game’s physics. For example, when Sam (the NPC) hit a wall, he needed to turn around and bounce back.
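The turn-around behavior described above can be sketched outside GameMaker. The following is a minimal Python illustration, not our project code: the `Patroller` class, the `step` function, and the wall coordinates are all hypothetical names chosen for the example. It assumes a single horizontal corridor and moves the NPC one pixel at a time, which is one common way to avoid both symptoms we saw (getting stuck inside a wall, or skipping past it when the speed is greater than one pixel per frame).

```python
from dataclasses import dataclass


@dataclass
class Patroller:
    """Hypothetical stand-in for Sam: walks left/right and reverses at walls."""
    x: int
    speed: int      # pixels moved per frame, always positive
    direction: int  # +1 = right, -1 = left


def blocked(x: int, left_wall: int, right_wall: int) -> bool:
    """True if position x would overlap either wall (assumed wall positions)."""
    return x <= left_wall or x >= right_wall


def step(npc: Patroller, left_wall: int, right_wall: int) -> None:
    """Advance one frame, one pixel at a time, so a wall is never skipped."""
    for _ in range(npc.speed):
        next_x = npc.x + npc.direction
        if blocked(next_x, left_wall, right_wall):
            # Turn around instead of stepping into the wall.
            npc.direction = -npc.direction
            next_x = npc.x + npc.direction
        if blocked(next_x, left_wall, right_wall):
            return  # boxed in on both sides: stay put this frame
        npc.x = next_x


if __name__ == "__main__":
    sam = Patroller(x=8, speed=3, direction=1)
    for frame in range(5):
        step(sam, left_wall=0, right_wall=10)
        print(frame, sam.x, sam.direction)
```

Checking the wall before each single-pixel move, rather than after a full-speed jump, means the character can never end a frame inside a wall, so there is nothing to get stuck in.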

Score/Countdown Timer Error – The most challenging aspect we faced – and one that caused errors even after game creation – was implementing the countdown timer that updated Sadie’s logged hours. The finished game was meant to work as follows: a countdown timer starting at 609 seconds would begin at the start of the game; when Sadie reached the nurse’s desk, the timer would stop, and the seconds remaining on the timer would be added to the player’s score. The player’s score was meant to be cumulative, meaning that if they did not reach the necessary score of 609 on their first playthrough, they would need to play again. For example, if Sadie finished the level with 598 seconds left on the timer (which translated to hours on the score sheet), this would be her starting score for the next level. When the next level started, the score would carry over, but the timer would restart at 609 seconds. Additionally, Sam’s speed would increase, making the game more difficult at each level.

The root of our problems came from updating the score based on the amount left on the countdown timer. Due to the limits of GameMaker’s drag-and-drop interface, we were unable to update the persistent (cumulative) score variable with the temporary timer variable, which resets each level. We did not know how to stop the game’s clock and, therefore, the timer, so we could not store the remaining timer value in the score variable: GameMaker could not update the score variable with a constantly changing integer. We tried storing the timer’s value at the exact moment Sadie reached the nurse’s desk using an intermediary variable, but this, too, failed to resolve the issue. If we had had an expert in GameMaker on our team, we certainly could have fixed this error; however, after several days and many YouTube tutorials, the error persisted. Ultimately, we had to accept this unresolved challenge, deferring its solution to the future.
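For reference, the intended scoring rules can be written down as a short sketch. This is plain Python, not GameMaker code, and it does not claim to be the fix for our project: the `GameState` class, its method names, and the starting value of `sam_speed` are assumptions made for illustration. Only the 609 starting value and the 598 example come from the design described above. The key idea is the order of operations: stop the clock first, then copy the now-frozen timer value into the persistent score.

```python
from dataclasses import dataclass

START_HOURS = 609  # countdown start, the hours Sadie records in the novel


@dataclass
class GameState:
    score: int = 0            # persistent: carries over between levels
    timer: int = START_HOURS  # temporary: resets at the start of each level
    sam_speed: int = 1        # hypothetical base speed for Sam
    running: bool = True      # whether the countdown is active

    def tick(self) -> None:
        """Called once per second; does nothing once the clock is stopped."""
        if self.running and self.timer > 0:
            self.timer -= 1

    def reach_desk(self) -> None:
        """Sadie reaches the nurse's desk: freeze the clock, then bank the hours."""
        if not self.running:
            return                # already banked for this level
        self.running = False      # stop the clock first...
        self.score += self.timer  # ...then read the frozen value

    def next_level(self) -> None:
        """Restart the countdown, keep the score, and make Sam faster."""
        self.timer = START_HOURS
        self.sam_speed += 1
        self.running = True


if __name__ == "__main__":
    game = GameState()
    for _ in range(11):  # 11 seconds pass before Sadie reaches the desk
        game.tick()
    game.reach_desk()
    print(game.score)    # 598 hours banked from the first level
    game.next_level()
    print(game.timer, game.score, game.sam_speed)
```

Because `tick` checks the `running` flag, the timer cannot change between the moment Sadie arrives and the moment the score is updated, which is the race we could not eliminate with an intermediary variable.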

Successes

Sprite Design – One of our major successes was our work on sprite design. Our sprite designer, Henry, decided to craft each sprite from scratch instead of relying on premade options available in GameMaker. This decision allowed for complete creative freedom to personalize Sam and Sadie. The detailed and unique sprites Henry created added a distinct visual appeal to our game, enhancing the overall player experience.

Movement Mechanics – Another satisfying achievement was mastering the basic movement mechanics. This fundamental aspect of our gameplay had to be smooth and responsive to ensure an enjoyable player experience. After a series of iterations and adjustments (and with a lot of help from Lizzie), we managed to program movements for Sadie that were not just functional, but simple and fluid.

Collaboration – These successes were not just about getting certain aspects of the game right; they were milestones that reflected our growth as game developers. Each sprite and every movement that worked were testaments to our evolving skills and deepening understanding of game design. We celebrated every small achievement. These successes propelled us forward, encouraging us to tackle more complex interactions within our game.

In addition to technical achievements, another area of success was our ability to work as a cohesive team despite the initial challenges with collaboration tools. We found ways to streamline our communication and workflow, ensuring that everyone was on the same page and could contribute effectively to the project. This collaborative spirit was crucial to overcoming the obstacles we faced.

Final Takeaways

- The limitations of the free version of GameMaker Studio 2 initially slowed down our progress. In any future projects, we plan to invest in the paid version of the software, which offers enhanced features for real-time team collaboration.

- The varied skills within our team played a pivotal role in our project’s development. For example, Lizzie’s programming expertise and Henry’s talent for design provided a balanced approach to tackling technical challenges. Sterling’s documentation was crucial in articulating our process in the game design document, while Alex’s creativity shone through in creating a compelling game trailer that captured the essence of the game’s story.

- One of the key lessons we learned from this project is the importance of persistence and continuous learning. Game development is a field that is constantly evolving, and being adaptable and eager to learn new skills is crucial. Sometimes, it was difficult to find a tutorial to aid our programming difficulties, so it was essential for us not to give up and try different approaches on our own.

Credits

Game Development: Henry, Lizzie

Game Design Document: Sterling

Game Trailer: Alex

Link to Game Trailer: OMISSION – Game Trailer
